Who’s Liable When AI Makes a Medical Mistake? Understanding Medical Liability

From improving diagnoses to assisting with surgery, AI is becoming an everyday tool in hospitals and clinics. While these technologies promise faster, more accurate care, they also raise a big question: Who is responsible when something goes wrong?

This article breaks down the evolving legal landscape of AI in medicine, exploring who could be held accountable when AI systems cause harm—and what steps can be taken to minimize liability and protect patients.

The Power and Perils of AI in Medicine

AI is especially useful in fields like radiology and oncology. It can spot complex patterns in scans or predict treatment outcomes. During the COVID-19 pandemic, AI also helped accelerate research and decision-making. On a broader scale, AI can help hospitals plan resources better and reduce human error.

But with this progress come challenges, particularly when things don’t go as planned. If an AI tool misdiagnoses a patient or leads to a harmful treatment, who is accountable—the doctor, the hospital, or the AI developer?

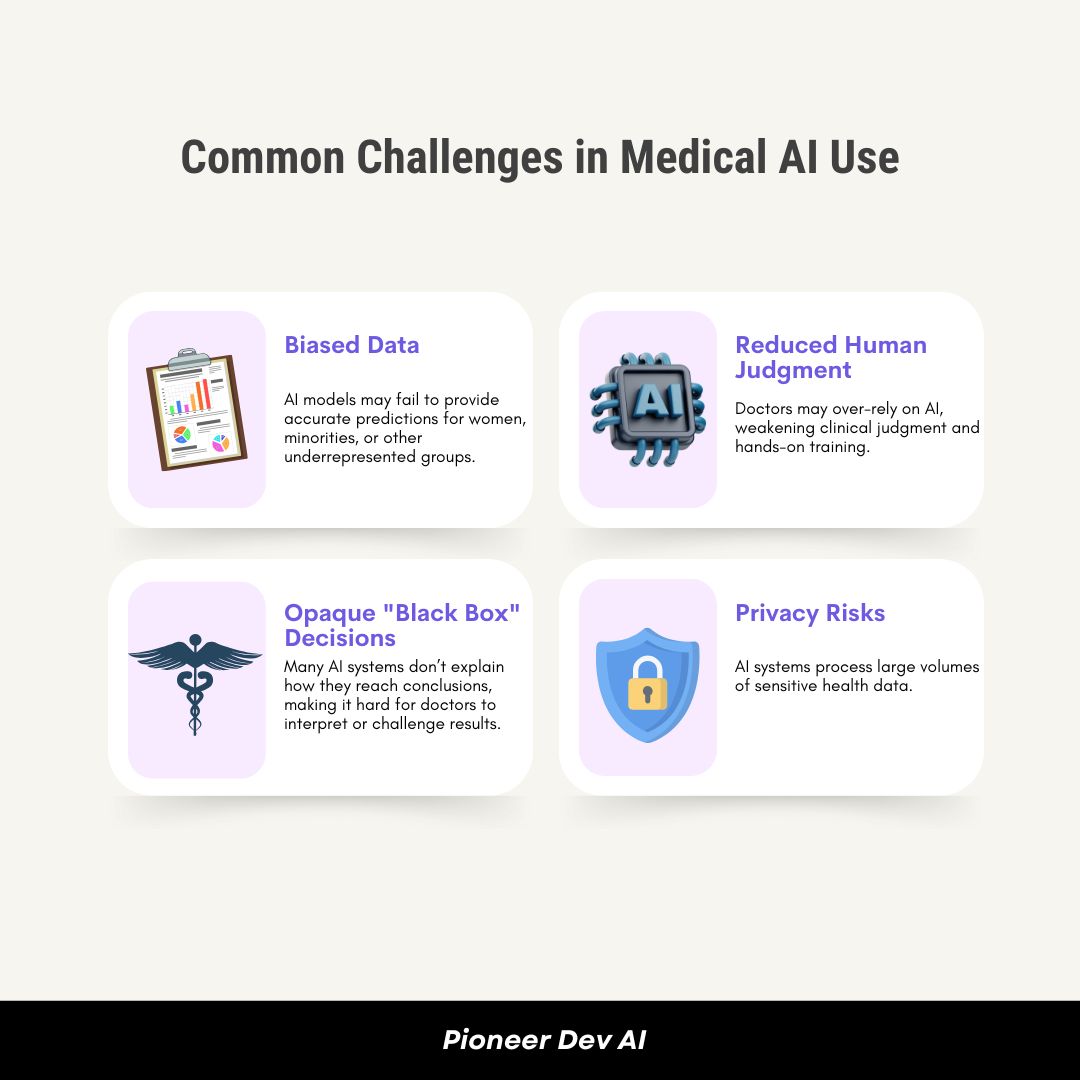

Common Challenges in Medical AI Use

Let’s look at four major concerns with AI in healthcare:

1. Biased Data

AI learns from historical data. If that data lacks representation from diverse populations, AI models may fail to provide accurate predictions for women, minorities, or other underrepresented groups.

2. Reduced Human Judgment

Doctors may over-rely on AI, weakening clinical judgment and hands-on training. The doctor-patient relationship could also suffer if empathy and human intuition take a backseat.

3. Opaque “Black Box” Decisions

Many AI systems don’t explain how they reach conclusions, making it hard for doctors to interpret or challenge results. This complicates informed consent and reduces accountability.

4. Privacy Risks

AI systems process large volumes of sensitive health data. Protecting this information from cyberattacks and misuse is essential.
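To make the first concern (biased data) more concrete, here is a minimal sketch of how a validation team might check whether a model performs worse for some patient groups than others. The function names, the record layout, and the 5-point gap threshold are illustrative assumptions, not an established standard or any vendor's method.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: fraction of correct predictions}.

    Each record is a (group, predicted, actual) tuple. The group labels
    are hypothetical and would come from the validation dataset.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def flag_gaps(per_group, max_gap=0.05):
    """List groups whose accuracy trails the best group by more than max_gap.

    The default max_gap of 0.05 is an assumed tolerance for illustration.
    """
    if not per_group:
        return []
    best = max(per_group.values())
    return sorted(g for g, acc in per_group.items() if best - acc > max_gap)
```

A check like this only surfaces a gap. Deciding what gap is acceptable, and what to do about it, remains a clinical and policy judgment.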

So, Who’s Liable When AI Goes Wrong?

Right now, liability for AI-related medical harm is still in legal limbo. However, courts and experts have explored a few possibilities:

- Doctors could be liable if they blindly follow AI recommendations without applying their own medical judgment.

- AI Developers might be responsible if the software fails due to a design flaw or faulty algorithm.

- Hospitals could be held accountable under “vicarious liability” if the AI system is considered part of their team.

- Product Liability laws may apply if the AI tool itself is considered a defective product.

- Some theorists even suggest giving AI tools legal personhood—but that’s still science fiction (for now).

- A new legal concept proposes treating developers, doctors, and hospitals as a shared enterprise, splitting responsibility.

Key Legal Hurdles in Holding AI Accountable

According to a comprehensive analysis by Stanford University’s Human-Centered AI Initiative, there are several reasons why suing over AI-related injuries is especially hard:

1. Lack of Case Law

Few lawsuits involving AI in healthcare have reached court decisions. Most judges are still relying on outdated legal frameworks meant for physical medical devices—not complex software.

2. AI ≠ Traditional Products

AI isn’t a tangible object, so product liability laws don’t always apply. Courts are unsure how to handle software that thinks and learns on its own.

3. FDA Preemption

If an AI tool has received FDA approval, federal law may preempt some state-level lawsuits against its manufacturer. This can limit a patient’s ability to sue for harm caused by regulated tools.

4. Burden of Proof

To win a case, patients must often:

- Prove a design defect

- Show the injury was foreseeable

- Present a safer alternative design

With AI’s “black box” nature, these tasks are extremely difficult.

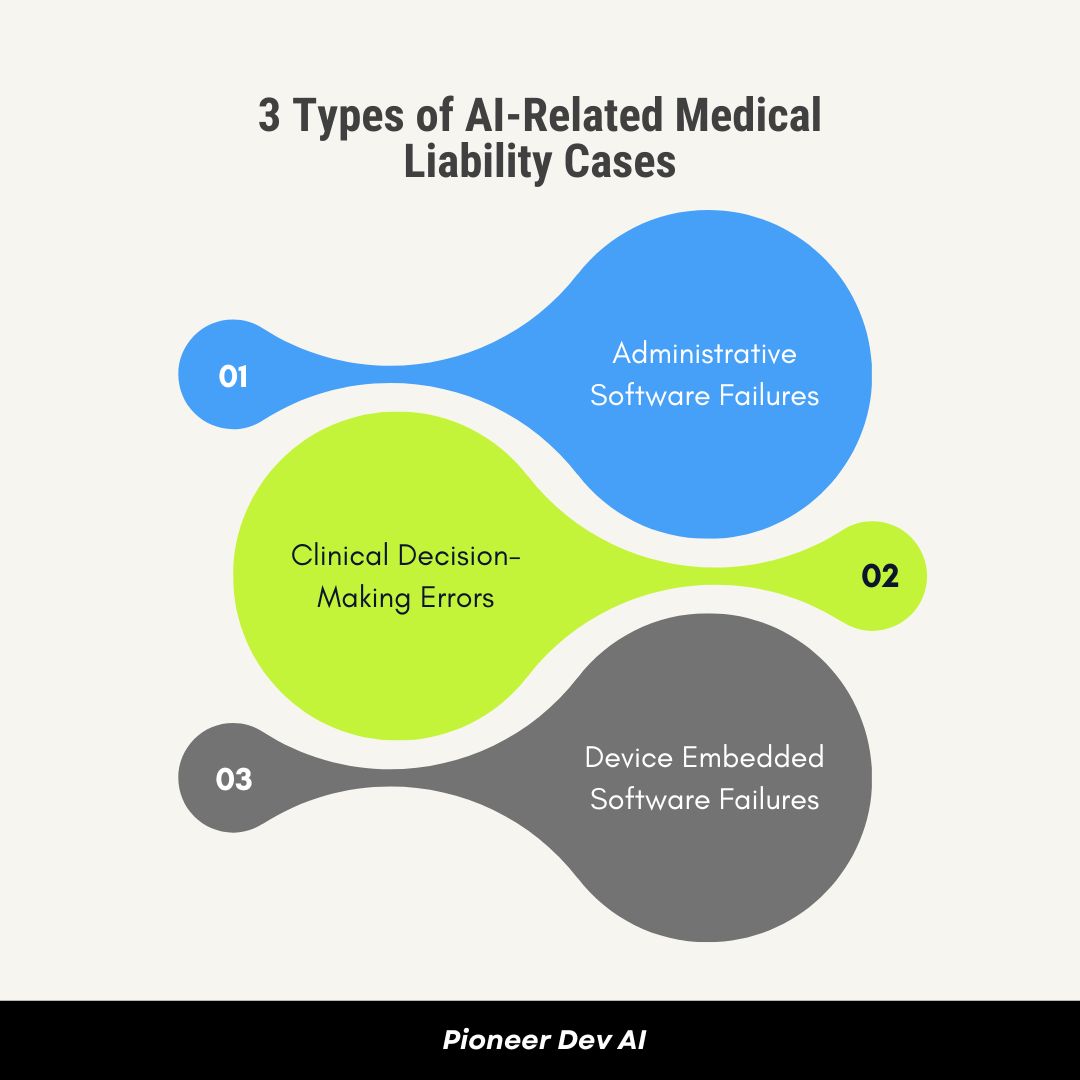

3 Types of AI-Related Medical Liability Cases

1. Administrative Software Failures

- Example: Drug scheduling software causes medication errors due to a confusing interface.

- Liability: Hospitals and developers may be sued for not maintaining or updating the system.

2. Clinical Decision-Making Errors

- Example: AI misclassifies a heart patient as “normal,” leading to a fatal delay in treatment.

- Liability: Doctors may be sued for relying on faulty AI, while developers may be targeted for flawed design. Courts are split on how to handle these cases.

3. Device-Embedded Software Failures

- Example: A reprogrammed infusion pump delivers a lethal morphine dose due to human error.

- Liability: Plaintiffs must show specific design flaws—not just that “the AI failed”—which is a high legal bar.

How Healthcare Providers Can Reduce Liability Risk

Don’t Treat All AI Equally

Some tools are riskier than others. Treat them accordingly.

Evaluate Tools Based On:

- Likelihood of errors

- Ability of humans to catch mistakes

- Severity of potential harm

- Whether injured patients could realistically be compensated
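One way to apply these four criteria consistently is a simple scoring rubric. The sketch below is only an illustration: the 1-to-5 scales, the field names, and the tier thresholds are assumptions made for this example, and a real program would set them with clinical, legal, and risk-management input.

```python
from dataclasses import dataclass


@dataclass
class ToolAssessment:
    """Hypothetical 1-5 ratings for one AI tool (higher means riskier)."""
    name: str
    error_likelihood: int   # 1 = errors are rare, 5 = errors are frequent
    hard_to_catch: int      # 1 = humans easily catch mistakes, 5 = mistakes go unnoticed
    harm_severity: int      # 1 = minor harm, 5 = life-threatening harm
    compensation_gap: int   # 1 = harm readily compensated, 5 = compensation unlikely


def risk_score(tool):
    """Sum the four ratings into a single score between 4 and 20."""
    return (tool.error_likelihood + tool.hard_to_catch
            + tool.harm_severity + tool.compensation_gap)


def risk_tier(tool):
    """Map a tool to an assumed tier: 'low', 'medium', or 'high'."""
    score = risk_score(tool)
    # Assumption: any life-threatening tool is high risk regardless of total.
    if tool.harm_severity == 5 or score >= 15:
        return "high"
    if score >= 9:
        return "medium"
    return "low"


scheduler = ToolAssessment("appointment scheduling", 2, 1, 1, 1)
cardiac = ToolAssessment("cardiac triage", 3, 4, 5, 3)
print(risk_tier(scheduler), risk_tier(cardiac))
```

Higher tiers would then call for closer monitoring, more human review, and stronger contractual protections.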

Use Contracts to Shift Risk

Hospitals can negotiate:

- Indemnification clauses (e.g., developer pays if AI fails)

- Transparency terms (how the model was trained and validated)

- Minimum insurance coverage

Conclusion

AI in healthcare holds incredible promise. It can improve diagnostic accuracy, reduce operational costs, and ultimately save lives. But without clear rules on accountability, these innovations risk eroding public trust.

To ensure AI is both powerful and safe, doctors, hospitals, developers, regulators, and legal experts must collaborate to build a transparent and fair liability framework.

At Pioneer Dev AI, we’re committed to developing ethical, reliable AI solutions that empower healthcare providers while prioritizing patient safety. As the industry continues to evolve, we believe accountability should evolve with it.

AI is the future of medicine—but responsibility must grow alongside innovation.